An Empirical Analysis of Secure Federated Learning for Autonomous Vehicle Applications

Abstract

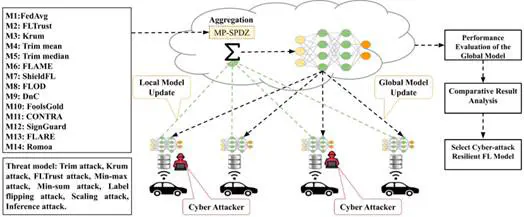

Federated learning is a promising paradigm for distributed learning in autonomous vehicle applications: it preserves data privacy while improving predictive model performance through collaborative training on edge client vehicles. However, it remains vulnerable to several categories of cyber-attacks and therefore requires more robust security measures. Poisoning and inference attacks are commonly launched within the federated learning environment to compromise system security. Secure aggregation can limit the disclosure of sensitive information to both outsider and insider attackers. This study conducts an empirical analysis on a transportation image dataset (e.g., LISA traffic light) using various secure aggregation techniques and multiparty computation in the presence of diverse cyber-attacks. Multiparty computation serves as a state-of-the-art security mechanism, providing privacy-preserving aggregation of local model updates from autonomous vehicles through multiple security protocols. The findings demonstrate how adversaries can mislead autonomous vehicle models, causing traffic light misclassification and potential hazards. The study also examines how resilient different secure federated learning aggregation and multiparty computation methods are in safeguarding autonomous vehicle applications against cyber threats during both the training and inference phases.
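To make the idea of privacy-preserving aggregation concrete, the following is a minimal sketch of additive secret sharing, one common building block of multiparty computation. It is an illustration only and is not claimed to be the protocol evaluated in this study. The field modulus `PRIME`, the fixed-point factor `SCALE`, the number of aggregation servers, and all function names are assumptions introduced for the example.

```python
import secrets

# Illustrative parameters (assumptions, not taken from the study).
PRIME = 2**61 - 1   # modulus of the finite field used for the shares
SCALE = 10**6       # fixed-point scale for encoding float weights


def encode(x: float) -> int:
    """Map a float weight to a field element using fixed-point encoding."""
    return round(x * SCALE) % PRIME


def decode(v: int) -> float:
    """Map a field element back to a float, treating large values as negative."""
    if v > PRIME // 2:
        v -= PRIME
    return v / SCALE


def share(value: int, n: int) -> list[int]:
    """Split a field element into n additive shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_mean(updates: list[list[float]], n_servers: int = 3) -> list[float]:
    """Average client updates so that each server only ever sees random shares."""
    dim = len(updates[0])
    # server_totals[s][j] accumulates the shares that server s receives
    # for coordinate j, across all clients.
    server_totals = [[0] * dim for _ in range(n_servers)]
    for update in updates:
        for j, weight in enumerate(update):
            for s, sh in enumerate(share(encode(weight), n_servers)):
                server_totals[s][j] = (server_totals[s][j] + sh) % PRIME
    # Only the combined per-server totals are revealed, never a single update.
    sums = [
        decode(sum(server_totals[s][j] for s in range(n_servers)) % PRIME)
        for j in range(dim)
    ]
    return [total / len(updates) for total in sums]


if __name__ == "__main__":
    # Three hypothetical vehicles, each with a two-parameter local update.
    vehicle_updates = [[0.5, -1.0], [1.5, 2.0], [1.0, -4.0]]
    print(secure_mean(vehicle_updates))
```

In this sketch no single server learns an individual vehicle's update, because each share on its own is uniformly random; only the sum over all servers is meaningful. Note that this hides individual updates but does not by itself detect a poisoned update, which is why robust aggregation is treated as a separate concern.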